I'll try to give a (brief?) account of the Fedora Activity Day (or simply FAD), which took place in São Paulo on June 1, 2013, better known as Saturday before last :-). If you'd like to see the event's organization page (in English), click this link here.

Arriving in Sampa

Well, since I'm a novice, inexperienced former Fedora ambassador who does nothing with his life (unlike several former colleagues who have been ambassadors for years, contributing solidly to the common good without ever dropping the ball), I decided to bring along the Fedora DVDs I still had so that Leo and Itamar (and whoever else was there!) could take care of redistributing them before they "expired". I left Campinas early and, with São Paulo free of traffic and trouble, made it to the Red Hat office a little after 9 a.m.

I met (and recognized!) a few people there: coworkers from the company, Fedora ambassadors and contributors, and enthusiasts who had come to learn more and see what the event was all about. It was certainly a productive afternoon and evening in terms of personal contacts!

Talks

After a delayed start, Leo opened with a talk about the Fedora Project (and its sub-projects, such as the Ambassadors project). Even though most (if not all!) of those present were already involved in the project in some way, the talk was still a good opportunity for discussion and reflection. I'd say most of the community's "cream of the crop" was in that room (with obvious exceptions such as Fábio Olivé, Amador Pahim, and others whose names I won't list because I'm too lazy to think of them all!). So I think Leo's plan (to revitalize the Fedora community in Brazil, especially its ambassadors) got off on not just one but two right feet (if that's even possible!).

The original idea was for each talk to last one hour, but of course, with so much to cover, Leo's talk ran much longer than that! By the time it ended, it was already lunchtime :-). As you'd expect, the conversation continued in the kitchen, and that's where I got to know everyone better. It was really nice :-).

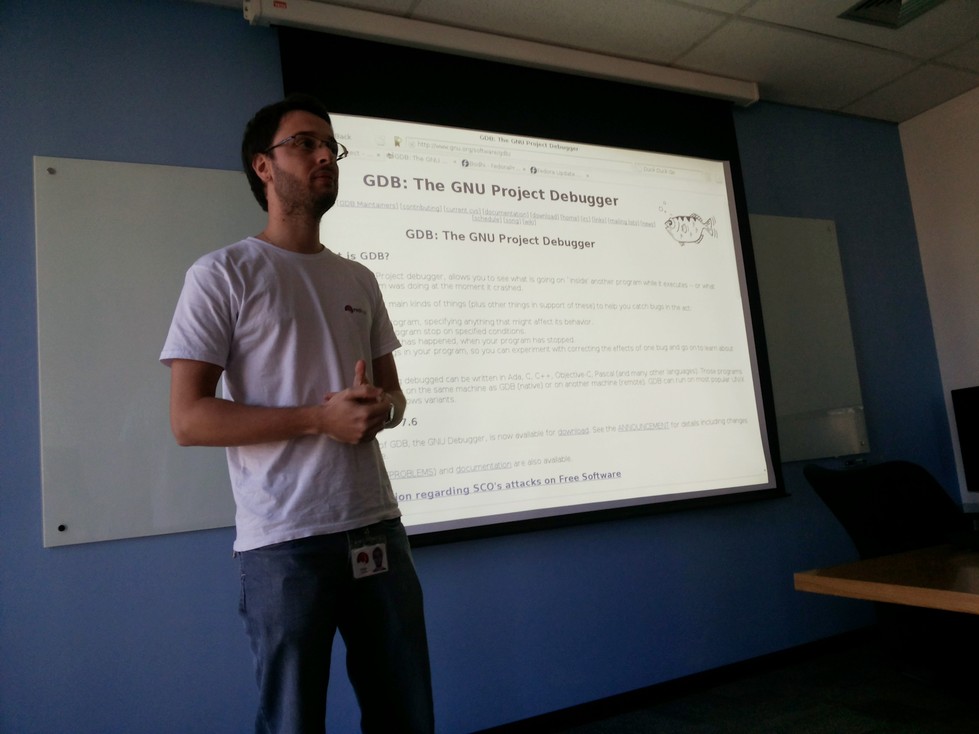

Well, with our batteries recharged, it was time for the second round of talks! Leo asked me to share a bit of my experience with GDB, both in dealing with the upstream community and in developing features for Fedora (or for Red Hat Enterprise (GNU/)Linux). I hadn't prepared any slides, so I went up armed with nothing but nerve and courage to have a chat with everyone ;-). Here is a photo taken during the talk (note the speaker's pose, poise, and elegance):

I think I managed to convey what my day-to-day work on GDB is like, sailing between the upstream and enterprise seas. A few people asked questions (Maurício Teixeira even asked technical ones!), and fortunately my talk was much shorter than Leo's! I certainly didn't have as much to cover :-P.

The last activity of the day was a hands-on session on RPM packaging led by Itamar. It was fun, and I think it gave people a sense that packaging for Fedora isn't a seven-headed monster. If you're interested in learning more, I suggest taking a look at the wiki page that covers the basics, and don't be shy about sending your questions to the Fedora development mailing lists!

After this live how-to, and considering the late hour (past 7 p.m.) and how tired everyone was, we decided to wrap up the event. In practice, we kept discussing several important points about the community, the problems we have experienced (yes, there are problems, unless you live in a fairy-tale world or aren't involved enough to notice them, in which case just ask someone to explain what's going on and maybe you'll understand), and possible solutions. I ended up leaving São Paulo at almost 8:30 p.m., but it was well worth the trip!

Conclusions

My personal conclusion is that I really needed to go to events and meet new people! I find that great: it's fuel for doing more things and having more ideas.

As for the community, my conclusion is that Leo is gradually changing the mindset of Fedora Brasil. I don't regret taking a break from the Ambassadors sub-project, and it's great to see what Leo & co. are doing to change things. They have my full support!

Acknowledgments

This event certainly wouldn't have happened without the tireless Leonardo Vaz. He undoubtedly deserves all the thanks and admiration of the (inter)national Fedora community for it. If you're reading this post, have any connection to Fedora, and are going to FISL this year, buy him a beer (or a juice!), because he deserves it.

I'd also like to thank everyone who came to the event. It's always good to see people who genuinely care about improving something, who don't close their eyes to the problems at hand, and above all who are willing to learn something new. It was rewarding to meet people like Germán, an Argentine astrophysicist who maintains two Python packages in Fedora without asking for anything in return! Or Hugo Cisneiros, who has been involved in the GNU/Linux world for as long as that hair of his took to grow :-P.

And long live Free Software!
